Transformer组件的各种变体

Difference:

- LayerNorm is in the front of the block

- Rotary position embeddings(RoPE)

- FF layers use SwiGLU,not ReLU

- Linear layers(and layernorm) have no bias(constant)terms

Pre norm is better than post norm

- Original transformer:LayerNorm after residual stream.First you do Multi-Head attention , add back to the resdual stream ,and then layernorm.You will do the same thing with the FFN layer.

- Use post norm is much less stable,which means you have to do some careful learning rate warm-up style things(reference the previous large model fine_tuning I did) to make it train in a stable way

- Now the layernorm is in front of the residual stream,which did much better in many different ways.(almost all modern LMs use Pre norm)

- Using pre norm we can remove warm up and it works just as well as post norm with some other stability-inducing tricks,even better

- Pre norm iss just a more stable architecture to tain.Today pre norm and other layer norm tricks being used essentially as stability-inducing

- New-things:double norm(pre norm with post norm)

Why is layer norm in the residual bad?

- Residual gives identity connection all the way from almost the top of the network all the way to the bottom,this makes gradient propagation very easy when you train models,if putting layer norm in the middle,might mess with that kind of gradient behavior.(梯度在深层反向传播,容易造成梯度消失)

LayerNorm vs RMSNorm

- LayerNorm

- RMSNorm

- Many models have moved on to RSMNorm,which is faster and just as good as LayerNorm

- RMSNorm has fewer operations and fewer parameters

- Flops:Tensor constraction is 99.8%,Stat.normalization is 0.17% ,but for runtime,Tensor constraction is 61%,Stat.normalization is 25.5%.Because tensor constraction is dense computation,Stat.normalization operations incur a lot of memory movement overhead.

- We not only think about FLOPs,but also memory movement.Optimize this is matter.

More generally:dropping bias terms

- Most modern transformers don't have bias terms,it performs as well as before and makes train more stable

Activations

ReLU

GeLU

- GeLU在x=0处是平滑的

GLU 门控线性单元

- $ \otimes$是逐元素乘法

- xV是输入依赖的门控,V是固定门控,如果仅仅使用V,门控信号是固定的,与输入无关,无法根据不同输入特征调整筛选策略

- ReGLU

- GeGLU

- SwiGLU

- swish is x*sigmoid(x)

Serial VS Parallel layers

- layernorm和attenyion并行处理

- 已经不再流行,多数模型还是使用串行架构

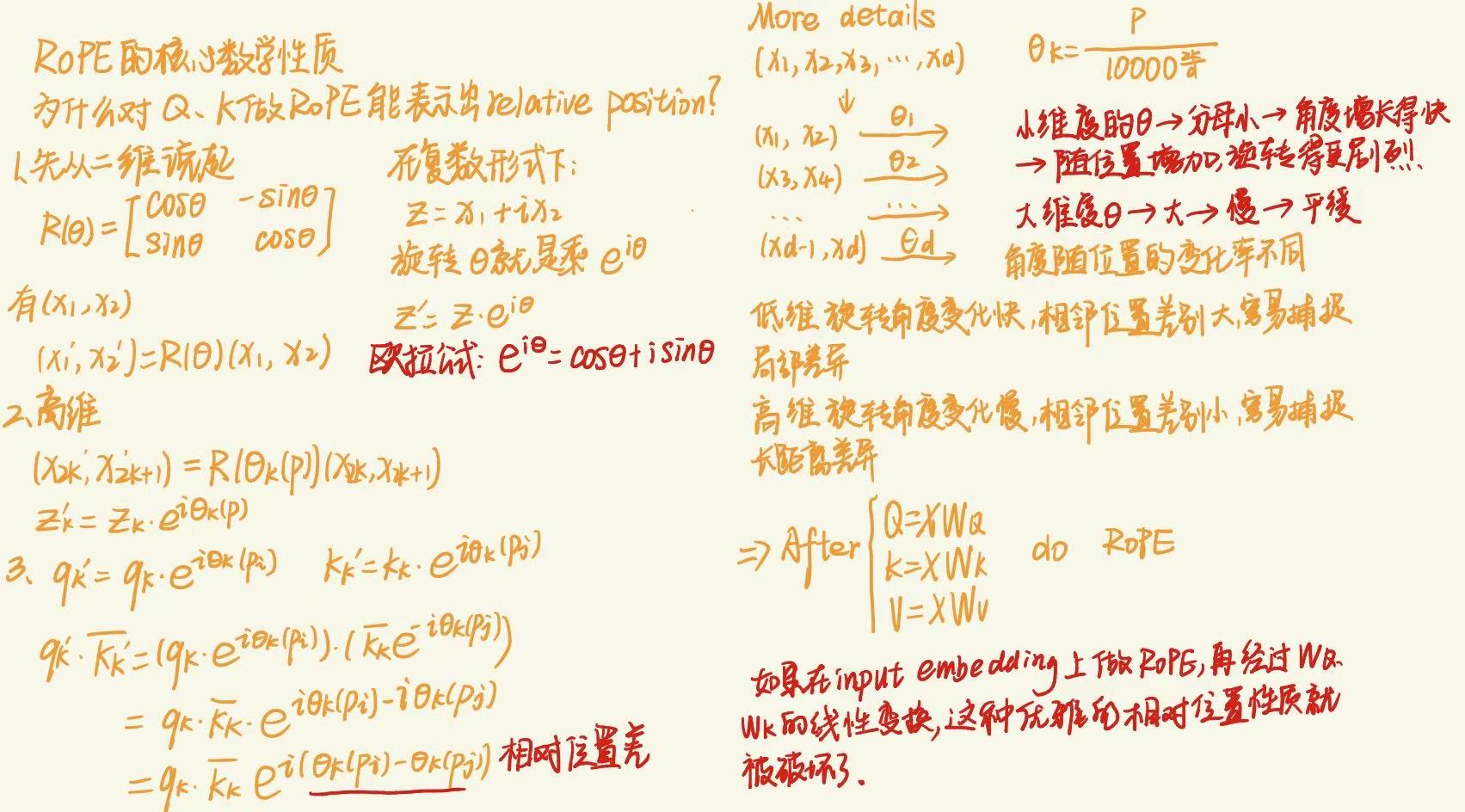

RoPE:rotary position embeddings

Hyperparameters

Feedforward size

- Feedforward layer的隐藏层的维度

- 每个token被表示成一个大小的向量 经过每层都保持这个大小

- $ d_{ff} = 4 d_{model} $,这是业界普遍的共识

- 例外1:GLU variants scale down by 2/3,which means most GLU variants have $ d_{ff} = 8/3 d_{model} $ 2至6倍

- 例外2:T5 64倍 后来T5更新换代使用了2.5倍的GLU

Model dim & Num heads & Head dim

- head dim 太小,每个头视野太窄,捕捉不到复杂的关系;太大,每个头很宽,但是头数少,可能缺乏多样性

- 大多数模型 radio=head dim×num heads / model dim 通常等于1,但有些模型不等于1

Aspect ratios 宽高比

- 业界普遍使用的128

Typical vocabulary size

- Token count 通常在10万到20万之间

Dropout and other regularization

- 预训练时,从直觉上来说,不需要权重衰减,但是实际上会做,原因:已经超越了“防止过拟合”这个传统概念,而更多地与优化过程本身的稳定性、收敛性和泛化能力的提升密切相关。

训练稳定的一些技巧

Z-Loss

QK norm

Logit soft-capping

这里不再详细叙述 直接问ai即可,它解释的很好